Evolution and the Problem of Other Minds – Jan 24

Elliott Sober*

Department of Philosophy

University of Wisconsin, Madison 53706

ersober@facstaff.wisc.edu

I.

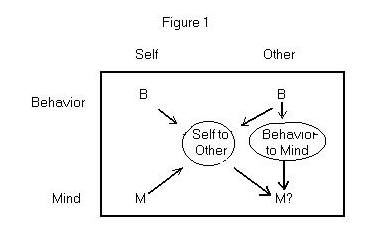

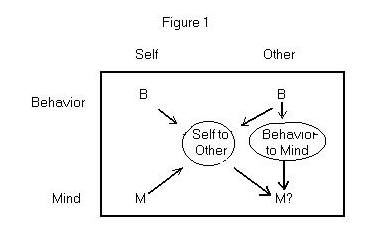

The following diagram illustrates two inference problems. First, there

is the strictly third-person behavior-to-mind problem in which I observe

your behavior and infer that you occupy some mental state. Second, there

is the self-to-other problem, in which I notice that I always or usually

occupy some mental state when I behave in a particular way; then, when

I observe you produce the same behavior, I infer that you occupy the same

mental state. This second inference is the subject of the traditional philosophical

problem of other minds.

How are these problems related? Notice that the inputs to the behavior-to-mind

problem are a subset of the inputs to the self-to-other problem. In the

first, I consider the behavior of the other individual; in the second,

I consider that behavior, as well as my own behavior and mental state.

This suggests that if the self-to-other problem is insoluble because the

evidence available is too meager, the same will be true of the behavior-to-mind

problem as well. Formulated in this way, it makes no sense to hold both that inferences from self to other cannot be drawn and that purely third-person behavior-to-mind inferences are sound.

I see no reason to think that a purely third-person scientific psychology

is impossible. Of course, if one is a skeptic about all nondeductive

inference, that skepticism will infect the subject matter of psychology.

And if one thinks that scientific inference can never discriminate

between empirically equivalent theories, one also will hold that science

is incapable of discriminating between such theories when their subject

matter is psychological. However, these aren’t special problems

about psychology. What, then, becomes of the problem of other minds? If that problem concerns the tenability of self-to-other inference, it appears to be no problem at all, provided that science is able to carry out behavior-to-mind inferences.

To see how the self-to-other problem can be detached from the problem of strictly third-person behavior-to-mind inference, we need to distinguish absolute

from incremental versions of the self-to-other problem. The absolute

problem concerns whether certain input information permits me to infer

that the other person occupies mental state M rather than some alternative

state A. As I’ve said, if information about the behavior of others permits

me to infer that they are in mental state M, then it is hard to see why

this inference should be undermined by adding the premiss that I myself am in mental state M. However, the question remains of whether first-person information makes a difference. This is the incremental version of the problem: to whatever degree third-person information provides an indication of whether the other person has M or A, does the addition of first-person information modify this assessment?

The incremental version of the self-to-other problem is neutral on the

question of whether third-person behavior-to-mind inference is possible.

It is this incremental problem that I think forms the core of the problem

of other minds, and this is the problem that I want to address here.

Although the problem of other minds usually begins with an introspective

grasp of one’s own mental state, it can be detached from that setting and

formulated more generally as a problem about “extrapolation.” Thus, we

might begin with the assumption that human beings produce certain behaviors

because they occupy particular mental states and ask whether this licenses

the conclusion that members of other species that exhibit the behavior

do so for the same reason. However, none of us knows just by introspection

that all human beings who produce a given behavior do so because

they occupy some particular mental state. In fact, this formulation of

the problem of other minds, in which it is detached from the concept of

introspection, is usually what leads philosophers to conclude that inferences

about other minds from one’s own case are extremely weak. The fact that

I own a purple bow tie isn’t evidence that you do too. This point about

bow ties is supposed to show that the fact that I have a mind isn’t evidence

that you do. I know that I own a purple bow tie, but not by introspection.

Discussion of the problem of other minds in philosophy seems to have

died down (if not out) around thirty years ago. Before then, it was discussed

as an instance of “analogical” or “inductive” reasoning and the standard

objection was that an inference about others based on your own situation

is an extrapolation from too small a sample. This problem does not disappear

merely by thinking of introspective experience as furnishing you with thousands

of data points. The fact remains that they all were drawn from the same

urn -- your own mind. How can sampling from one urn help you infer the

composition of another?

When the problem is formulated in this way, it becomes pretty clear

that what is needed is some basic guidance about inductive inference. It

isn’t the mental content of self-to-other inference that makes it

problematic, but the fact that it involves extrapolation. Some extrapolations make sense and others don’t. It seems sensible to say that thirst makes

other people drink water, based on the fact that this is usually why I

drink. Yet, it seems silly to say that other folks walk down State Street

at lunchtime because they crave spicy food, based just on the fact that

this is what sets me strolling. By the same token, it seems sensible to

attribute belly buttons to others, based on my own navel gazing. Yet, it

seems silly to universalize the fact that I happen to own a purple bow

tie. What gives?

The first step towards answering this question started to emerge in

the 1960s, not in philosophy of mind, but in philosophy of science. There

is a general point about confirmation that we need to take to heart --

observations provide evidence for or against a hypothesis only in the context

of a set of background assumptions. If the observations don’t deductively

entail that the hypothesis of interest is true, or that it is false, then

there is no saying whether the observations confirm or disconfirm, until

further assumptions are put on the table. This may sound like the Duhem/Quine

thesis, but that way of thinking about the present point is somewhat misleading,

since Duhem (1914) and Quine (1953) discussed deductive, not probabilistic,

connections of hypotheses to observations. If we want a person to pin this

thesis to, it should be I.J. Good. Good (1967, 1968) made this point forcefully

in connection with Hempel’s (1965, 1967) formulation of the ravens paradox.

Hempel thought it was clear that black ravens and white shoes both

confirm the generalization that all ravens are black. The question that

interested him was why one should think that black ravens provide stronger

confirmation than white shoes. Good responded by showing that empirical

background knowledge can have the consequence that black ravens actually disconfirm the generalization. Hempel replied by granting that one could have special

information that would undercut his assumption that black ravens and white

shoes both confirm. However, he thought that in the absence of such information,

it was a matter of logic that black ravens and white shoes are confirmatory.

For this reason, Hempel asked the reader to indulge in a “methodological

fiction.” We are to imagine that we know nothing at all about the world,

but are able to ascertain of individual objects what colors they have and

whether they are ravens. We then are presented with a black raven and a

white shoe; logic alone is supposed to tell us that these objects are confirming

instances. Good’s response was that a person in that circumstance would

not be able to say anything about the evidential meaning of the observations.

I think that subsequent work on the concept of evidence, both in philosophy

and in statistics, has made it abundantly clear that Good was right and

Hempel was wrong. Confirmation is not a two-place relationship between

observations and a hypothesis; it is a three-place relation between observations,

a hypothesis, and a background theory. Black ravens confirm the generalization

that all ravens are black, given some background assumptions, but fail

to do so, given others. And if no background assumptions can be brought

to bear, the only thing one can say is -- out of nothing, nothing comes.

If we apply this lesson to the problem of other minds, we obtain the

following result:

The fact that I usually or always have mental property M when I perform

behavior B is, by itself, no reason at all to think that you usually

or always have M when you perform B. If that sounds like skepticism, so

be it. However, the inference from Self to Other can make sense, once additional

background assumptions are stated. A nonskeptical solution to the problem

of other minds, therefore, must identify plausible further assumptions

that bridge the inferential gap between Self and Other.

II.

The problem of other minds has been and continues to be important in

psychology (actually, in comparative psychology), except that there

it is formulated in the first person plural. Suppose that when human beings

perform behavior B, we usually or always do so because we have mental property

M. When we observe behavior B in another species, should we take the human

case to count as evidence that this species also has mental property M?

For this problem to be nontrivial, we assume that there is at least one

alternative internal mechanism, A, which also could lead organisms to produce

the behavior. Is the fact that humans have M evidence that this other species

has M rather than A?

Discussion of this problem in comparative psychology has long been dominated

by the fear of naive anthropomorphism (Dennett 1989, Allen and Bekoff 1997).

C. Lloyd Morgan (1903) suggested that if we can explain a nonhuman organism’s behavior either in terms of a “higher” mental faculty or in terms of a “lower” mental faculty, we should prefer the latter explanation. Morgan’s successors generally liked his canon because of its prophylactic qualities: it reduces the chance of a certain type of error. However, it is important

to recognize that there are two types of error that might occur

in this situation:

|  | O lacks M | O has M |
|---|---|---|
| Deny that O has M |  | type-2 error |
| Affirm that O has M | type-1 error |  |

Morgan’s canon does reduce the chance of type-1 error, but that isn’t

enough to justify the canon. By the same token, a principle that encourages

anthropomorphism would reduce the chance of type-2 error, but that wouldn’t

justify this liberal principle, either.

Morgan tried to give a deeper defense of his canon. He thought he could

justify it on the basis of Darwin’s theory of evolution. I have argued

elsewhere that Morgan’s argument does not work (Sober 1998b). However,

it is noteworthy that Morgan explicitly rejects what has become a fairly

standard view of his principle -- that it is a version of the principle

of parsimony. Morgan thought that the simplest solution would be

to view nonhuman organisms as just like us. The canon, he thought, counteracts

this argument from parsimony. As we will see, Morgan’s view about the relationship

of his canon to the principle of parsimony was prescient.

I now want to leave Morgan’s late-nineteenth-century ideas about comparative psychology behind, and fast-forward to the cladistic revolution in evolutionary biology that occurred in the 1970s and after. The point of interest here

is the use of a principle of phylogenetic parsimony to infer phylogenetic

relationships among species, based on data concerning their similarities

and differences (see, for example, Eldredge and Cracraft 1980). Although

philosophers often say that parsimony is an ill-defined concept, its meaning

in the context of the problem of phylogenetic inference is pretty clear.

The hypotheses under consideration all specify phylogenetic trees. The

most parsimonious tree is the one that requires the smallest number of

changes in character state in its interior to produce the observed distribution

of characteristics across species at the tree’s tips.

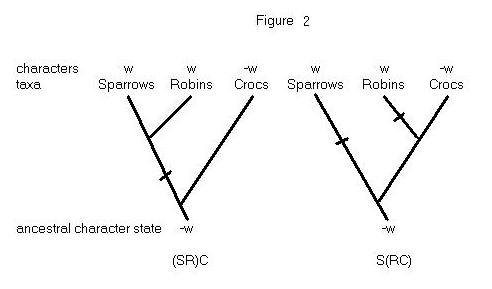

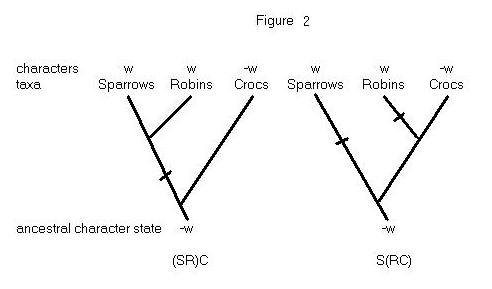

Consider the problem of inferring how sparrows, robins, and crocodiles

are related to each other. Two hypotheses that might be considered are

depicted in Figure 2. The (SR)C hypothesis says that sparrows and robins

have a common ancestor that is not an ancestor of crocs; the S(RC) hypothesis

says that it is robins and crocs that are more closely related to each

other than either is to sparrows. Now consider an observation -- sparrows

and robins have wings, while crocodiles do not. Which phylogenetic hypothesis

is better supported by this observation?

If winglessness is the ancestral condition, then (SR)C is the more parsimonious

hypothesis. This hypothesis can explain the data about tip taxa by postulating

a single change in character state on the branch with a slash through it.

The S(RC) hypothesis, on the other hand, must postulate at least two changes

in character states to explain the data. The principle of cladistic parsimony

says that the observations favor (SR)C over S(RC). Notice that the parsimoniousness

of a hypothesis is assessed not by seeing how many changes it says actually

occurred, but by seeing what the minimum number of changes is that

the hypothesis requires. (SR)C is the more parsimonious hypothesis because

it entails a lower minimum.
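The counting rule just described can be made concrete in a short program. What follows is a minimal sketch, assuming a hypothetical encoding in which a rooted tree is a nested tuple of tip names, the root's state is fixed in advance (here winglessness, coded 0), and every change of state on a branch costs one unit, in the manner of unweighted Sankoff parsimony. It reproduces the comparison for Figure 2: (SR)C requires a minimum of one change and S(RC) a minimum of two.

```python
from math import inf

def min_changes(tree, tip_states, root_state, states=(0, 1)):
    """Minimum number of character-state changes a rooted tree requires.

    tree: nested tuples of tip names, e.g. (("S", "R"), "C") (hypothetical encoding).
    tip_states: dict mapping each tip name to its observed state.
    root_state: state assumed at the root (fixed, not inferred).
    Each change along a branch costs 1 (unweighted Sankoff-style counting).
    """
    def cost(node):
        # For each possible state at this node, the fewest changes needed below it.
        if isinstance(node, str):
            return {s: (0 if s == tip_states[node] else inf) for s in states}
        child_costs = [cost(child) for child in node]
        return {
            s: sum(min(c[t] + (s != t) for t in states) for c in child_costs)
            for s in states
        }

    return cost(tree)[root_state]


# Figure 2 data: sparrows and robins have wings (1), crocodiles lack them (0).
wings = {"S": 1, "R": 1, "C": 0}
print(min_changes((("S", "R"), "C"), wings, root_state=0))  # (SR)C -> 1
print(min_changes(("S", ("R", "C")), wings, root_state=0))  # S(RC) -> 2
```

Note that the function reports the minimum a hypothesis requires, not how many changes actually occurred, which is exactly the quantity the principle of cladistic parsimony compares.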

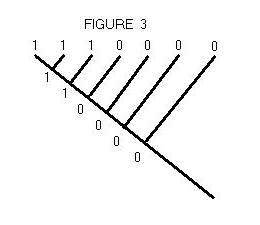

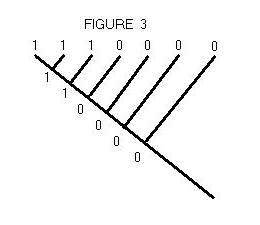

Although cladistic parsimony first attracted the attention of biologists

because it helps one reconstruct genealogical relationships, there is a

second type of problem that parsimony is used to address. If you know what

the genealogy is that connects a set of contemporaneous species, and you

observe what character states these tip species have, you can use parsimony

to reconstruct the character states of ancestors. This inference is illustrated

in Figure 3. Given the character states (1's and 0's) of tip species, the

most parsimonious assignment of character states to interior nodes is the

one shown.
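The same bookkeeping can be run in reverse to label interior nodes. The sketch below is illustrative only: it uses a hypothetical nested-tuple tree encoding, unit costs per change, and a made-up five-species data set (not the data of Figure 3). It first computes, for each node, the fewest changes needed below it under each possible state, then walks down from the root choosing states that keep the total minimal. When several assignments tie, it simply returns the first one found.

```python
from math import inf

def subtree_costs(node, tips, states):
    """For each state at `node`, the fewest changes needed in the subtree below it."""
    if isinstance(node, str):
        return {s: (0 if s == tips[node] else inf) for s in states}
    kids = [subtree_costs(k, tips, states) for k in node]
    return {s: sum(min(c[t] + (s != t) for t in states) for c in kids) for s in states}

def label_down(node, tips, states, state, labels, path):
    """Record `state` for an interior node, then give each child a state that keeps the total minimal."""
    if isinstance(node, str):
        return  # tips keep their observed states
    labels[path] = state
    for i, child in enumerate(node):
        c = subtree_costs(child, tips, states)
        best = min(states, key=lambda t: c[t] + (state != t))
        label_down(child, tips, states, best, labels, f"{path}.{i}")

def most_parsimonious_labeling(tree, tips, states=(0, 1)):
    """Return (minimum number of changes, one most-parsimonious state per interior node).

    Interior nodes are named by their path from the root: "root", "root.0", "root.0.0", ...
    Ties are broken in favor of the state listed first in `states`.
    """
    root_costs = subtree_costs(tree, tips, states)
    root_state = min(states, key=lambda s: root_costs[s])
    labels = {}
    label_down(tree, tips, states, root_state, labels, "root")
    return root_costs[root_state], labels


# Hypothetical example (not the data of Figure 3).
tree = ((("A", "B"), "C"), ("D", "E"))
tips = {"A": 1, "B": 1, "C": 0, "D": 0, "E": 0}
changes, labels = most_parsimonious_labeling(tree, tips)
print(changes)  # 1
print(labels)   # {'root': 0, 'root.0': 0, 'root.0.0': 1, 'root.1': 0}
```

Here the single change falls on the branch leading to the ancestor of A and B, so that ancestor is assigned 1 and every other interior node 0.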

Parsimony is now regarded in evolutionary biology as a reasonable way

to infer phylogenetic relationships. Whether it is the absolutely best

method to use, in all circumstances, is rather more controversial. And

the foundational assumptions that need to be in place for parsimony to

make sense as an inferential criterion are also a matter of continuing

investigation.

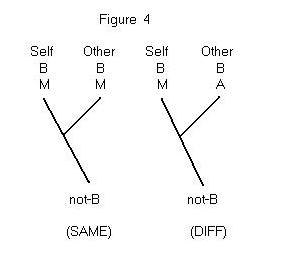

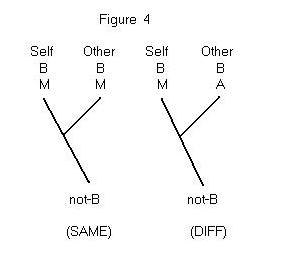

The reason I’ve explained the basic idea behind cladistic parsimony

is that it applies to the problem of other minds, when Self and Other are

genealogically related. Suppose both Self and Other are known to have behavioral

characteristic B, and that Self is known to have mental characteristic

M. The question is whether Other should be assigned M as well. As before,

I’ll assume that M is sufficient for B, but not necessary (an alternative

internal mechanism, A, also could produce B). The two hypotheses we need

to consider are depicted in Figure 4. If the root of the tree has the characteristic not-B (that is, the trait of having neither-M-nor-A), then the (Same) hypothesis is more parsimonious than the (Diff) hypothesis. It is consistent with (Same) that the postulated similarity between Self and Other is a homology; it is possible that the most recent common ancestor of Self and Other had M, and that M was transmitted unchanged from this ancestor to the two descendants.

The (Same) hypothesis, therefore, requires only a single change in character

state, from neither-M-nor-A to M. In contrast, the (Diff) hypothesis requires

at least two changes in character state.
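The comparison of (Same) and (Diff) can be checked with a change count that uses three character states: N (neither M nor A, hence not-B), M, and A. This is a sketch under stated assumptions: the root is fixed at N, any change between two of the three states costs one unit, and the tree is written in a hypothetical nested-tuple encoding whose outer one-element tuple stands for the stem lineage leading from the root to the most recent common ancestor of Self and Other.

```python
from math import inf

def min_changes(tree, tip_states, root_state, states):
    """Minimum number of unit-cost state changes a rooted tree requires (root state fixed)."""
    def cost(node):
        # For each state at this node, the fewest changes needed below it.
        if isinstance(node, str):
            return {s: (0 if s == tip_states[node] else inf) for s in states}
        kids = [cost(child) for child in node]
        return {s: sum(min(c[t] + (s != t) for t in states) for c in kids) for s in states}
    return cost(tree)[root_state]

STATES = ("N", "M", "A")      # "N" = neither M nor A, the assumed root state
tree = (("Self", "Other"),)   # stem branch -> common ancestor -> Self, Other

same = min_changes(tree, {"Self": "M", "Other": "M"}, "N", STATES)
diff = min_changes(tree, {"Self": "M", "Other": "A"}, "N", STATES)
print(same, diff)  # 1 2
```

(Same) needs only the single change N to M on the stem, after which M is inherited by both descendants; (Diff) needs at least one further change somewhere.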

De Waal (1991, 1999) presents this cladistic argument in defense of

the idea that parsimony favors anthropomorphism -- we should prefer

the hypothesis that other species have the same mental characteristics

that we have when they exhibit the same behavior (see also Sober 1998b

and Shapiro forthcoming). This parsimony argument goes against Morgan’s

canon, just as Morgan foresaw. De Waal adds the reasonable proviso that

the parsimony inference is strongest when Self and Other are closely related.

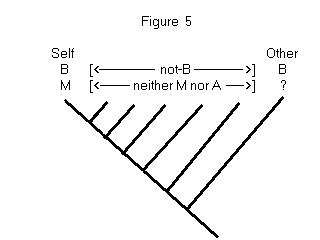

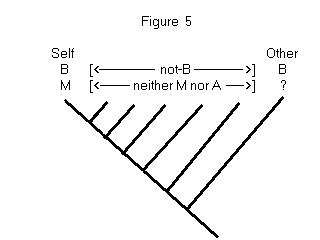

There is another proviso that needs to be added to this analysis, which

is illustrated in Figure 5. As before, Self and Other are observed to have

behavioral trait B. We know by assumption that Self has the mental trait

M. And the question, as before, is what we should infer about Other --

does it have M or A? The new wrinkle is that there are additional species

depicted in the tree, ones that are known to lack B. The genealogical relationships

that connect these further species to Self and Other entail that the most

parsimonious hypothesis is that trait B evolved twice. It now makes no

difference in parsimony whether one thinks that Other has M or A. What

this shows is that parsimony favors anthropomorphism about mentalistic

properties only when the behaviors in question are thought to be homologous.
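To see the proviso numerically, add two hypothetical relatives, X and Y, that lack B and are placed so that B must have evolved twice. The topology below is an assumed stand-in for Figure 5, not a copy of it, and the counting assumptions are as before: the root is fixed at N (neither M nor A) and each change costs one unit. Under this arrangement (Same) and (Diff) both require two changes.

```python
from math import inf

def min_changes(tree, tip_states, root_state, states):
    """Minimum number of unit-cost state changes a rooted tree requires (root state fixed)."""
    def cost(node):
        if isinstance(node, str):
            return {s: (0 if s == tip_states[node] else inf) for s in states}
        kids = [cost(child) for child in node]
        return {s: sum(min(c[t] + (s != t) for t in states) for c in kids) for s in states}
    return cost(tree)[root_state]

STATES = ("N", "M", "A")
# Hypothetical topology: X and Y lack B, so B must arise separately in Self's and Other's lineages.
tree = (("Self", "X"), ("Other", "Y"))

same = min_changes(tree, {"Self": "M", "X": "N", "Other": "M", "Y": "N"}, "N", STATES)
diff = min_changes(tree, {"Self": "M", "X": "N", "Other": "A", "Y": "N"}, "N", STATES)
print(same, diff)  # 2 2
```

Once B has to evolve twice, each origin costs one change whether it yields M or A, so parsimony no longer favors extrapolating M to Other.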

This point has implications about a kind of question that often arises

in connection with sociobiology. Parsimony does not oblige us to think

that “slave-making” in social insects has the same psycho-social causes

as slave-making in humans (or that rape in human beings has the same proximate

mechanism as “rape” in ducks). The behaviors are not homologous,

so there is no argument from parsimony for thinking that the same proximate

mechanisms are at work. This is a point in favor of the parsimony analysis

-- cladistic parsimony explains why certain types of implausible inference

really are implausible. Parsimonious anthropomorphism is not the

same as naive anthropomorphism.

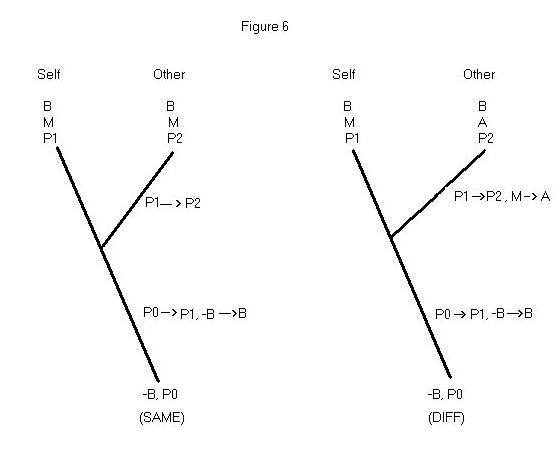

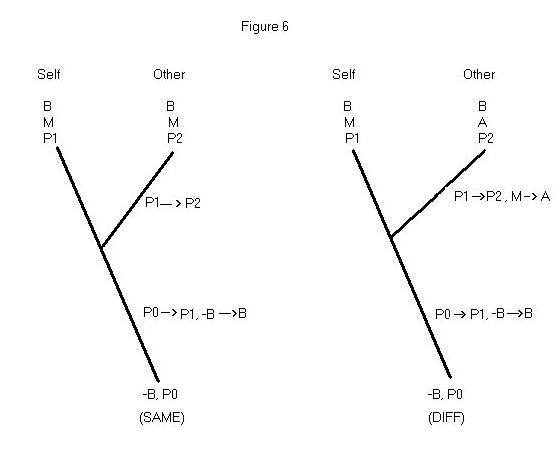

Just as extrapolation from Self to Other can be undermined by behavioral

information about other species, extrapolation also can be undermined by

neurological information about Self and Other themselves. If Self has mental

state M by virtue of being in physical state P1, what are we to make of

the discovery that Other has physical state P2 (where P1 and P2 are mutually

exclusive)? If we accept functionalism’s assurance that mental state M

is multiply realizable, this information does not entail that Other cannot

be in mental state M; after all, P2 might just be a second supervenience

base for M. On the other hand, P2 might be a supervenience base for alternative

state A, and not for M at all. This inference problem is illustrated in

Figure 6. For simplicity, let’s suppose that P1 and P2 each suffice for

B. P1 produces B by way of mental state M; it isn’t known at the outset

whether P2 produces B by way of mental state M, or by way of alternative

state A. We assume that the ancestor at the root of the tree did not exhibit

the behavior in question, and that this ancestor had trait P0.

The conclusion we must reach is that the two hypotheses in Figure 6

are equally parsimonious. (Same) construes M as a homology shared by Self

and Other, and P1 and P2 as two of its alternative supervenience bases.

(Same) requires two changes to account for the characteristics at the tips;

either P1 or P2 evolved long ago from an ancestor who had P0, and then

either P1 changed to P2, or P2 changed to P1. Notice that the fact that

not-B changes to B in the same branch in which P0 changes to either P1

or P2 does not count as two changes, since the first entails the

second. The (Diff) hypothesis also requires two changes. The figure depicts

these changes as occurring from P0 to P1 and then from P1 to P2. Of course,

(Diff) also describes a change from M to A on the branch leading to Other,

but this doesn’t count as a change that is distinct from the one on that

branch that goes from P1 to P2. Thus, the discovery of relevant neurophysiological

differences can throw a monkey wrench into the machinery of parsimonious

anthropomorphism.
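The rule that entailed changes on a single branch count as one change can be captured by coding each lineage with a compound state that pairs its physical state with the mental state that physical state realizes. The sketch below makes that coding assumption explicit. Each hypothesis restricts which pairs are available: under (Same), P2 realizes M; under (Diff), it realizes A. The root is fixed at (P0, N), with N meaning neither M nor A, and behavior B is left implicit because P1 and P2 each suffice for it. The tree encoding is hypothetical.

```python
from math import inf

def min_changes(tree, tip_states, root_state, states):
    """Minimum number of unit-cost state changes a rooted tree requires (root state fixed)."""
    def cost(node):
        if isinstance(node, str):
            return {s: (0 if s == tip_states[node] else inf) for s in states}
        kids = [cost(child) for child in node]
        return {s: sum(min(c[t] + (s != t) for t in states) for c in kids) for s in states}
    return cost(tree)[root_state]

# A compound state changes once per branch, however many entailed features change with it.
ROOT = ("P0", "N")
same_states = (ROOT, ("P1", "M"), ("P2", "M"))  # (Same): P1 and P2 both realize M
diff_states = (ROOT, ("P1", "M"), ("P2", "A"))  # (Diff): P2 realizes A instead

tree = (("Self", "Other"),)  # stem branch -> common ancestor -> Self, Other
same = min_changes(tree, {"Self": ("P1", "M"), "Other": ("P2", "M")}, ROOT, same_states)
diff = min_changes(tree, {"Self": ("P1", "M"), "Other": ("P2", "A")}, ROOT, diff_states)
print(same, diff)  # 2 2
```

Both hypotheses come out at two changes, matching the conclusion drawn from Figure 6: the physical difference between Self and Other costs a change either way, and under (Diff) the switch from M to A rides along with it.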

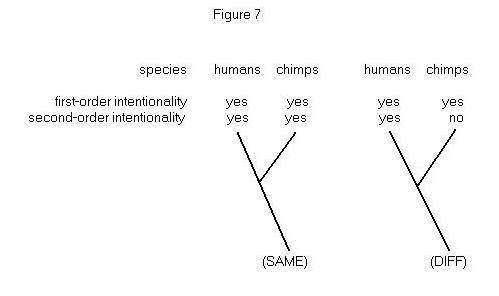

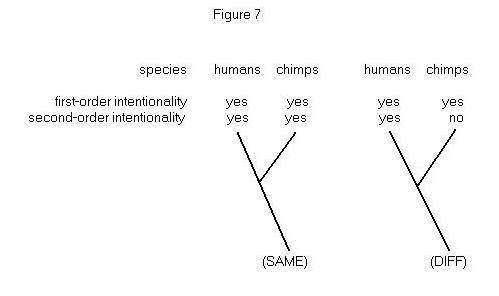

As a final exercise in understanding how the machinery of phylogenetic

parsimony applies to the problem of extrapolating from Self to Other, let’s

consider the current controversy in cognitive ethology concerning whether

chimps have a theory of mind (see the papers collected in Carruthers and

Smith 1995 and the critical review by Heyes 1998). It is assumed at the

outset that chimps have what Dennett (1989) terms first-order intentionality;

they are able to formulate beliefs and desires about the extra-mental objects

in their environment. The question under investigation is whether they,

in addition, have second-order intentionality. Do they have the ability

to formulate beliefs and desires about the mental states of Self and Other?

Adult human beings have both first- and second-order intentionality. Do

chimps have both, or do they have first-order intentionality only?

Figure 7 provides one cladistic representation that might be given of this question. I assume that the ancestral condition is the absence of both

types of intentionality. The point to notice is that parsimony considerations

do not discriminate between the two hypotheses. The (SAME) hypothesis and

the (DIFF) hypothesis both require two changes in the tree’s interior –

first- and second-order intentionality must each evolve at least once.

The principle of parsimony in this instance tells us to be agnostic, and

so disagrees with Morgan’s canon, which tells us to prefer (DIFF) (Sober

1998b).
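For Figure 7, the two kinds of intentionality can be coded as separate binary characters (1 = present, 0 = absent), both absent at the root, with the changes in each character summed. This is a sketch with a hypothetical tree encoding. Because no behaviors are coded, nothing distinguishes the hypotheses, and both come out at two changes.

```python
from math import inf

def min_changes(tree, tip_states, root_state, states=(0, 1)):
    """Minimum number of unit-cost state changes a rooted tree requires (root state fixed)."""
    def cost(node):
        if isinstance(node, str):
            return {s: (0 if s == tip_states[node] else inf) for s in states}
        kids = [cost(child) for child in node]
        return {s: sum(min(c[t] + (s != t) for t in states) for c in kids) for s in states}
    return cost(tree)[root_state]

tree = (("Human", "Chimp"),)              # stem branch -> common ancestor -> Human, Chimp
first_order = {"Human": 1, "Chimp": 1}    # assumed shared at the outset
second_same = {"Human": 1, "Chimp": 1}    # (SAME)
second_diff = {"Human": 1, "Chimp": 0}    # (DIFF)

same = min_changes(tree, first_order, 0) + min_changes(tree, second_same, 0)
diff = min_changes(tree, first_order, 0) + min_changes(tree, second_diff, 0)
print(same, diff)  # 2 2
```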

Why does the problem formulated in Figure 7 lead to a different conclusion

from the problem represented in Figure 4 in terms of the two states M and

A? In the latter, we have a parsimony argument that favors extrapolation;

in the former, we find that extrapolating is no more and no less parsimonious

than not extrapolating. Notice that Figure 4 mentions the behavior B, which

internal mechanisms M and A are each able to produce. Figure 7, however,

makes no mention of the behaviors that first- and second-order intentionality

underwrite. This is the key to understanding why the analyses come out

differently.

The point behind Figure 4 is this: if two species exhibit a homologous

behavior, each of them must also possess an internal mechanism (M or A)

for producing the behavior. It is more parsimonious to attribute the same

mechanism to both Self and Other than to attribute different mechanisms

to each. The situation depicted in Figure 7 is different. First-order intentionality

presumably allows organisms that have it to exhibit a range of behaviors

B1. When a species that has first-order intentionality evolves second-order

intentionality as well, this presumably augments the behaviors that organisms

in the species are able to produce; the repertoire expands to B1&B2.

If human beings exhibit B1 because they have first-order intentionality,

we parsimoniously explain the fact that chimps also exhibit B1 (if they

do) by saying that chimps have first-order intentionality. However, there

is no additional gain in parsimony to be had from attributing second-order

intentionality to them as well, unless we also observe them producing the

behaviors in B2. This is why cognitive ethologists are entirely correct

in thinking that they need to identify a behavior that chimps will produce

if they have second-order intentionality, but will not produce if they

possess first-order intentionality only. Identifying a behavior of this

sort permits an empirical test to be run concerning whether chimps have

a theory of mind. Phylogenetic parsimony does not tell us what to expect

here. This example illustrates another respect in which parsimonious anthropomorphism

differs from naive anthropomorphism. Cladistic parsimony does not provide

a blanket endorsement of extrapolation; rather, the principle says that

one should extrapolate when and only when this increases the parsimony

of the total phylogenetic picture.

Although the representation of the problem in Figure 7 is flawed because

it does not indicate which behaviors the two species are able to produce,

it does have the virtue of coding the mentalistic traits more perspicuously.

We should not code the possession of both first- and second-order intentionality

as “M” and the state of having first- but not second-order intentionality

as “A.” This obscures the fact that M is really two traits and that M and

A have a common element. The fact that a cladistic analysis is sensitive

to how the data are coded is not a special consequence of applying parsimony

considerations to traits that are mentalistic; it is a universal feature

of the methodology.

III.

Why should we trust the parsimony arguments just described? Is parsimony

an inferential end in itself or is there some deeper justification for

taking parsimony seriously? In the present circumstance at least, there

is no need to regard parsimony as something that we seek for its own sake.

My suggestion is that parsimony matters in problems of phylogenetic inference

only to the extent that it reflects likelihood (Sober 1988). Here

I am using the term “likelihood” in the technical sense introduced by R.A.

Fisher. The likelihood of a hypothesis is the probability it confers on

the observations, not the probability that the observations confer on the

hypothesis. The likelihood of H, relative to the data, is Pr(Data | H), not Pr(H | Data).

To see how the likelihood concept can be brought to bear on cladistic

parsimony, let’s consider Figure 2. It can be shown, given some minimal

assumptions about the evolutionary process, that the data depicted in that

figure are made more probable by the (SR)C hypothesis than they are by the S(RC) hypothesis (Sober 1988). These assumptions are as follows:

-- heritability: Character states of ancestors and descendants are positively correlated. That is, Pr(Descendant has a wing | Ancestor has a wing) > Pr(Descendant has a wing | Ancestor lacks a wing).

-- chance: All probabilities are strictly between 0 and 1.

-- screening-off: Lineages evolve independently of each other, once they branch off from their most recent common ancestor.

I think that most biologists would agree that these assumptions hold

pretty generally. The first of them does not say that descendants probably end up resembling their ancestors. The claim is not that stasis is more probable than change, that is, that Pr(Descendant has a wing | Ancestor has a wing) > Pr(Descendant lacks a wing | Ancestor has a wing). Rather, the claim is that if a descendant has a wing, this result would have been more probable if its ancestor had had a wing than it would have been if its ancestor had lacked a wing. This is equivalent to saying that the trait in question has nonzero heritability.

Perhaps the only assumption open to serious question is the last one --

after all, there are ecological circumstances in which a lineage’s evolving

a trait influences the probability that other contemporaneous lineages

will do the same. But even here, the assumption of perfect independence

could be weakened without materially affecting the qualitative conclusions

I want to draw. And in many circumstances, this assumption is a reasonable

idealization. What we have here is a result that Reichenbach (1956) proved in connection with his principle of the common cause (Sober 1988).
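The likelihood comparison for Figure 2 can be illustrated, though not proved in general, with a hypothetical two-state model. The sketch below assumes that every branch has the same transition matrix, that the root is fixed in the wingless state, and that lineages evolve independently after they split (screening-off). It computes Pr(Data | tree) by summing over the unobserved interior states, in the style of Felsenstein's pruning recursion. The illustrative matrix satisfies heritability and chance, and with it (SR)C comes out nine times more likely than S(RC).

```python
def check_assumptions(P):
    """Chance: every transition probability strictly between 0 and 1.
    Heritability: Pr(descendant=1 | ancestor=1) > Pr(descendant=1 | ancestor=0)."""
    assert all(0 < p < 1 for row in P for p in row), "chance violated"
    assert P[1][1] > P[0][1], "heritability violated"

def partial_likelihoods(node, tips, P):
    """[Pr(tip data below node | node in state 0), Pr(tip data below node | node in state 1)]."""
    if isinstance(node, str):
        return [1.0 if s == tips[node] else 0.0 for s in (0, 1)]
    result = [1.0, 1.0]
    for child in node:
        below = partial_likelihoods(child, tips, P)
        for s in (0, 1):
            # Screening-off: given the parent's state, each child lineage contributes independently.
            result[s] *= sum(P[s][t] * below[t] for t in (0, 1))
    return result

def likelihood(tree, tips, P, root_state=0):
    return partial_likelihoods(tree, tips, P)[root_state]

# Hypothetical branch transition matrix: P[a][d] = Pr(descendant in d | ancestor in a).
P = [[0.9, 0.1],
     [0.1, 0.9]]
check_assumptions(P)

wings = {"S": 1, "R": 1, "C": 0}
l_src = likelihood((("S", "R"), "C"), wings, P)
l_s_rc = likelihood(("S", ("R", "C")), wings, P)
print(round(l_src, 3), round(l_s_rc, 3))  # 0.081 0.009
```

The numbers depend on the matrix chosen; what the text claims, and what this instance merely exemplifies, is the direction of the inequality under the three assumptions.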

Not only does likelihood provide a framework for understanding the role

of parsimony considerations in phylogenetic inference; it also allows us

to address the Self and Other problem depicted in Figure 4. The assumptions

listed above entail the following inequality:

(P) $\Pr(\text{Self has } M \mid \text{Other has } M) > \Pr(\text{Self has } M \mid \text{Other has } A)$.
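Inequality (P) can be checked for a pair of lineages descended from a common ancestor. In the sketch below, the ancestor's probability of having M and the per-lineage transition probabilities are hypothetical numbers chosen to satisfy heritability and chance. By screening-off, Self's and Other's states are independent once the ancestor's state is given, and the unknown ancestral state is summed out.

```python
def pr_self_M_given_other(other_state, pi_M, P):
    """Pr(Self has M | Other has other_state) for two lineages from one common ancestor.

    pi_M: hypothetical probability that the common ancestor had M.
    P[x][y]: hypothetical Pr(descendant in y | ancestor in x), the same for both lineages.
    """
    prior = {"M": pi_M, "A": 1 - pi_M}
    # Screening-off: given the ancestor's state x, Self and Other evolve independently.
    joint = sum(prior[x] * P[x]["M"] * P[x][other_state] for x in prior)
    marginal = sum(prior[x] * P[x][other_state] for x in prior)
    return joint / marginal

P = {"M": {"M": 0.8, "A": 0.2},   # heritability: 0.8 > 0.3
     "A": {"M": 0.3, "A": 0.7}}
lhs = pr_self_M_given_other("M", 0.5, P)
rhs = pr_self_M_given_other("A", 0.5, P)
print(round(lhs, 3), round(rhs, 3))  # 0.664 0.411
```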

Provided that Self and Other are genealogically related, there is a

likelihood justification for anthropomorphism. The observation that Self

has M is rendered more probable by the hypothesis that Other has M than

by the hypothesis that Other has A. Such differences in likelihood are

generally taken to indicate a difference in support -- the observation

favors the first hypothesis over the second (Royall 1997, Sober 1999).

Turning to the concept of heritability, let’s consider the factors that influence the values of conditional probabilities that have the form Pr(Descendant has ±M | Ancestor has ±M). One natural way to

understand such probabilities, whether they apply to individual organisms

or to species, is to think of the lineage linking ancestor to descendant

as having a given, small probability of changing from M to A in any brief

moment of time, and another, possibly different, small probability of changing

in the opposite direction in that same small instant of time. Then the

probability that a lineage ends in one state, given that it begins in another,

will be a function of those “instantaneous” probabilities of change and

of the amount of time that there is in the lineage. The standard analysis

of these lineage transition probabilities entails that Pr(Descendant has M | Ancestor has M) is close to 1 when the amount of time is very small, and that

$$\Pr(\text{Descendant has } M \mid \text{Ancestor has } M) = \Pr(\text{Descendant has } M \mid \text{Ancestor has } A)$$

in the limit as the amount of time separating ancestor and descendant becomes infinite (Sober 1988). It follows that the inequality stated in (P) is maximal when

Self and Other are very closely related and becomes smaller as Self and

Other become more distantly related. This model confirms what De Waal suggests

-- that anthropomorphism is on firmer ground for our near relatives than

it is for those species to whom we are related more distantly. This point

need not be added as an independent constraint on parsimony arguments (which

have no machinery for taking account of recency of divergence), but flows

from the likelihood analysis itself.
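The model described in this paragraph can be written as a two-state continuous-time Markov chain. The sketch below assumes hypothetical constant rates alpha (M to A) and beta (A to M), gives each of Self and Other t units of time since their common ancestor, and draws the ancestor's state from the chain's stationary distribution (an added assumption). Under these assumptions the gap between the two sides of (P) equals exp(-2(alpha + beta)t), so it is largest for close relatives and shrinks toward zero as divergence time grows.

```python
from math import exp

def transition(alpha, beta, t):
    """Pr(state at time t | state at time 0); alpha = M->A rate, beta = A->M rate (hypothetical)."""
    total = alpha + beta
    decay = exp(-total * t)
    p_MM = beta / total + (alpha / total) * decay  # equals 1 at t = 0
    p_AM = beta / total - (beta / total) * decay   # equals 0 at t = 0
    return {"M": {"M": p_MM, "A": 1 - p_MM},
            "A": {"M": p_AM, "A": 1 - p_AM}}

def gap(alpha, beta, t):
    """Pr(Self=M | Other=M) - Pr(Self=M | Other=A), each lineage t time units past the split."""
    P = transition(alpha, beta, t)
    total = alpha + beta
    prior = {"M": beta / total, "A": alpha / total}  # stationary distribution (assumed)
    def cond(other):
        joint = sum(prior[x] * P[x]["M"] * P[x][other] for x in prior)
        return joint / sum(prior[x] * P[x][other] for x in prior)
    return cond("M") - cond("A")

for t in (0.01, 0.5, 2.0, 10.0):
    print(t, round(gap(1.0, 0.5, t), 4))
```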

A probability framework also throws light on the ideas discussed in

connection with Figure 5. In this case there is no difference in parsimony

between assigning M to Other and assigning A to Other, if the behavior

B shared by Self and Other is a result of convergence.

What probabilistic assumptions might underwrite this conclusion? The

assumptions just discussed that suffice to justify proposition (P) are

not enough. What is needed, in addition, is some way to compare the probability

of M’s arising and of A’s arising in lineages that have neither. If these

probabilities are the same, then the likelihoods of the two hypotheses

will be the same. And if no assumption about how these probabilities are related can be endorsed, then no extrapolation can be endorsed. Likelihood considerations do not oblige

us to think that slave-making in the social insects is driven by the same

internal mechanisms that lead human beings to enslave each other.
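Under convergence, the lineages leading to Self and Other each start from the ancestral state N (neither M nor A) and acquire their mechanisms independently (screening-off). A minimal sketch, with hypothetical per-lineage probabilities of ending in N, M, or A: the ratio of the probability of the (Same) configuration to that of the (Diff) configuration reduces to Pr(N to M) / Pr(N to A), so it equals 1 exactly when M and A are equally likely to arise.

```python
def config_probability(p_from_N, self_state, other_state):
    """Probability that two independently evolving lineages, each starting in state "N",
    end in the given states. p_from_N[s] = hypothetical Pr(lineage ends in s | starts in N)."""
    return p_from_N[self_state] * p_from_N[other_state]

equal_origins = {"N": 0.8, "M": 0.1, "A": 0.1}
unequal_origins = {"N": 0.8, "M": 0.15, "A": 0.05}
for p in (equal_origins, unequal_origins):
    same = config_probability(p, "M", "M")
    diff = config_probability(p, "M", "A")
    print(round(same / diff, 3))  # 1.0, then 3.0
```

Without some independent reason to fix the ratio of these origin probabilities, the observation that Self has M says nothing about Other.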

IV.

Genealogical relatedness suffices to justify a likelihood inference

from Self to Other, if the Reichenbachian assumptions that I described

hold true. However, is genealogical relatedness necessary for this

extrapolation to make sense? Proposition (P) says that Self and Other are

correlated with respect to trait M. What could induce this correlation?

Reichenbach argued that whenever there is a correlation of two events,

either the one causes the other, or the other causes the one, or the two

trace back to a common cause. Considerations from quantum mechanics suggest

that this isn’t always the case (Van Fraassen 1982a), and doubts about

Reichenbach’s principle can arise from a purely classical point of view

as well (Van Fraassen 1982b; Sober 1988; Cartwright 1989). However, if

we are not prepared to suppose that mentalistic correlations between Self

and Other are brute facts, and if my having M does not causally influence

whether Other has M (or vice versa), then Reichenbach’s conclusion

seems reasonable, if not apodictic – if Self and Other are correlated,

this should be understood as arising from a common cause.
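Reichenbach's (1956) model of such a common cause is the conjunctive fork. A cause C raises the probability of each of two effects, X and Y. X and Y are also independent of each other conditional on C and conditional on not-C. The sketch below uses hypothetical numbers. It checks that these conditions produce an unconditional correlation between X and Y, and that the correlation disappears when C makes no difference to one of the effects.

```python
from fractions import Fraction


def fork_probabilities(p_c, x_if_c, x_if_not_c, y_if_c, y_if_not_c):
    """Return (P(X), P(Y), P(X and Y)) for a common cause C of X and Y.

    Screening off is built in: within C and within not-C, the joint
    probability of X and Y is the product of their separate probabilities.
    """
    p_x = p_c * x_if_c + (1 - p_c) * x_if_not_c
    p_y = p_c * y_if_c + (1 - p_c) * y_if_not_c
    p_xy = p_c * x_if_c * y_if_c + (1 - p_c) * x_if_not_c * y_if_not_c
    return p_x, p_y, p_xy


half, high, low = Fraction(1, 2), Fraction(9, 10), Fraction(1, 10)

# C raises the probability of both X and Y: X and Y become correlated.
p_x, p_y, p_xy = fork_probabilities(half, high, low, high, low)
print(p_xy, p_x * p_y)  # 41/100 1/4

# C makes no difference to X: no correlation, even though C still affects Y.
p_x, p_y, p_xy = fork_probabilities(half, Fraction(3, 10), Fraction(3, 10), high, low)
print(p_xy == p_x * p_y)  # True
```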

Genealogical relatedness is one type of common cause structure. It can

induce the correlation described in (P) by having ancestors transmit genes

to their descendants, but there are alternatives that we need to recognize. To begin with,

parents exert nongenetic influences on their offspring through teaching and learning. For example,

children have a higher probability of speaking Korean if their parents

speak Korean than if their parents do not, but this is not because there

is a gene for speaking Korean. And there are nongenetic connections between

parents and offspring that do not involve learning, as when a mother transmits

immunity to her children through her breast milk.

Correlations between Self and Other also can be induced by common causes

when Self and Other are not genealogically related. If students resemble

their teachers, then students of the same teacher will resemble each other.

Here learning does the work that genetic transmission is also able to do.

Similarly, Self and Other can be correlated when they are influenced by

a common environmental cause that requires no learning. For example, if

influenza is spreading through one community, but not through another,

then the fact that I have the flu can be evidence that Other does too,

if the two of us live in the same community.
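The influenza case can be put in numbers. All figures below are hypothetical. The model makes two assumptions. First, each community independently has an outbreak with probability 1/2. Second, given a community's outbreak status, its members catch the flu independently of one another. Under these assumptions, my having the flu raises the probability that Other has it only when we share a community, that is, only when a common cause is present.

```python
from fractions import Fraction

# Hypothetical figures, chosen only for illustration.
P_OUTBREAK = Fraction(1, 2)           # chance that a given community has an outbreak
P_FLU_IF_OUTBREAK = Fraction(3, 10)   # chance an individual has flu during an outbreak
P_FLU_IF_NO_OUTBREAK = Fraction(1, 50)


def p_flu() -> Fraction:
    """Unconditional probability that a given individual has the flu."""
    return P_OUTBREAK * P_FLU_IF_OUTBREAK + (1 - P_OUTBREAK) * P_FLU_IF_NO_OUTBREAK


def p_other_flu_given_mine(same_community: bool) -> Fraction:
    """Pr(Other has flu | I have flu)."""
    if not same_community:
        # Outbreak status is independent across communities, so my case says nothing about Other's.
        return p_flu()
    # Same community: given outbreak status, the two of us catch flu independently,
    # but the outbreak status itself is a cause we share.
    p_both = (P_OUTBREAK * P_FLU_IF_OUTBREAK ** 2
              + (1 - P_OUTBREAK) * P_FLU_IF_NO_OUTBREAK ** 2)
    return p_both / p_flu()


print(p_other_flu_given_mine(same_community=True))   # 113/400
print(p_other_flu_given_mine(same_community=False))  # 4/25
```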

I list these alternatives, not because all of them apply with equal

plausibility to the problem of other minds, but to give an indication of

the range of alternatives that needs to be considered. The question is

whether there are common causes that impinge on Self and Other that induce

the correlation described in (P); if there are, then there will be a likelihood

justification for extrapolating from Self to Other.

V.

What, exactly, do cladistic parsimony and its likelihood analysis

tell us about the problem of other minds? When I cry out, wince, and remove

my body from an object inflicting tissue damage, this is (usually) because

I am experiencing pain. Is the same set of behaviors, when produced by

other organisms, whether they are human or not, evidence that they feel

pain? The hypothesis that Other feels pain is the more parsimonious one, and it is also

the more likely one, if Reichenbachian assumptions about common causes are correct.

Does that completely solve the problem of other minds, or does there remain

a residue of puzzlement?

One thing that is missing from this analysis is an answer to the question

of how much evidence the introspected state of Self provides

about the conjectured state of Other. I have noted that the likelihoods

in proposition (P) become more different as Self and Other become more

closely related, but this comparative remark does not entail any quantitative

benchmarks. It is left open whether the observations strongly favor

one hypothesis over the other, or do so only weakly.
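A standard phylogenetic model can show why the comparative claim leaves the strength of evidence open. The model is adopted here purely as an illustrative assumption. Suppose M and A are two states of a character that switch back and forth at a common rate u (a symmetric two-state Markov process). Suppose also that the common ancestor of Self and Other is equally likely to be in either state, and that Self and Other diverged t time units ago. Then Pr(Self has M | Other has M) = 1/2 + exp(-4ut)/2, and Pr(Self has M | Other has A) = 1/2 - exp(-4ut)/2. The likelihood ratio always exceeds 1. Depending on the hypothetical values of u and t, however, it can be large or barely above 1.

```python
import math


def pair_likelihoods(rate: float, t: float) -> tuple[float, float]:
    """Return (Pr(Self has M | Other has M), Pr(Self has M | Other has A)).

    Assumes a symmetric two-state Markov model with switching rate `rate`,
    a 50/50 ancestral state, and divergence t time units ago, so the path
    from Self back to the ancestor and down to Other has length 2t.
    """
    # The symmetric two-state chain forgets its state at 2*rate per unit of path length.
    decay = math.exp(-2 * rate * (2 * t))
    return 0.5 + 0.5 * decay, 0.5 - 0.5 * decay


def likelihood_ratio(rate: float, t: float) -> float:
    same, different = pair_likelihoods(rate, t)
    return same / different  # undefined at t = 0, where `different` is 0


for t in (0.1, 1.0, 10.0):
    print(f"divergence t = {t:>4}: likelihood ratio = {likelihood_ratio(0.1, t):.2f}")
```

The ratio shrinks toward 1 as divergence time grows. This matches the comparative remark in the text, but the model alone supplies no threshold for "strong" evidence.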

Another detail that I have not addressed is how probable it is

that Other has M. If I have M and Self and Other both exhibit behavior

B, is the probability greater than 0.5 that Other has M as well? The discussion

here answers that question only in part: principle (P) is equivalent

to the claim that Self’s having M raises the probability that Other has

M. Whatever the prior probability is that Other has M, the posterior probability

is greater. Whether additional information can be provided that allows

the value of that posterior probability to be estimated is a separate question.
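The equivalence can be checked with Bayes' theorem. In the sketch below, lik_m stands for Pr(Self has M | Other has M) and lik_a stands for Pr(Self has M | Other has A). Reading (P) as the claim that lik_m exceeds lik_a is an assumption of this illustration. With hypothetical values satisfying that inequality, the posterior probability that Other has M exceeds the prior for every prior strictly between 0 and 1. Yet it can remain well below 0.5 when the prior is low.

```python
from fractions import Fraction


def posterior_other_m(prior: Fraction, lik_m: Fraction, lik_a: Fraction) -> Fraction:
    """Pr(Other has M | Self has M) by Bayes' theorem.

    prior = Pr(Other has M); lik_m = Pr(Self has M | Other has M);
    lik_a = Pr(Self has M | Other has A).
    """
    evidence = prior * lik_m + (1 - prior) * lik_a  # Pr(Self has M)
    return prior * lik_m / evidence


lik_m, lik_a = Fraction(4, 5), Fraction(2, 5)  # hypothetical values with lik_m > lik_a
for prior in (Fraction(1, 10), Fraction(1, 2), Fraction(9, 10)):
    print(prior, "->", posterior_other_m(prior, lik_m, lik_a))
```

With a prior of 1/10, the posterior rises only to 2/11. It is raised, as the text says, but it stays far short of 0.5.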

A genealogical perspective on the problem of other minds helps clarify

how that problem differs from the behavior-to-mind problem discussed at

the outset. At first glance, it might appear that it doesn’t matter to

the problem of other minds whether the other individual considered is a

human being, a dog, a Martian, or a computer. In all these cases, the question

can be posed as to whether knowledge of one’s own case permits extrapolation

to another system that is behaving similarly. We have seen that these different

formulations receive different answers. I share ancestors with other human

beings, and I share other, more remote, ancestors with nonhuman organisms

found on earth. However, if creatures on other planets evolved independently

of life on earth, then I share no common ancestors with them. In this case,

extrapolation from Self to Other does not have the justification I have

described. I would go further and speculate that it has no justification

at all. This doesn’t mean that we are never entitled to attribute mental

states to such creatures. What it does mean is that we must approach such

questions as instances of the purely third-person behavior-to-mind inference

problem. Similar remarks apply to computers. When they behave similarly

to us (perhaps by passing an appropriate Turing test), we may ask what

causes them to do so. We have no ancestors in common with them; rather,

we have constructed them so that they produce certain behaviors. However,

this provides no reason to think that the proximate mechanisms behind those

behaviors resemble those found in human beings. For both Martians and computers,

extrapolation from one’s own case will not be justified. Indeed, this point

applies to organisms with whom we do share ancestors, if the behaviors

we have in common with them are not homologies. And even when Self and

Other do share a behavioral homology, if Self and Other are known to deploy

different neural machinery for exhibiting that behavior, the extrapolation

of M from Self to Other is undermined.

A probabilistic representation of the problem of other minds shows that

the usual objection to extrapolating from Self to Other is in fact irrelevant,

or, more charitably, that it rests on a factual assumption about the world

that we have no reason to believe. The question is not whether introspected

information about one’s own mind provides lots of data or only a little.

Rather, the issue is how strong the correlation is between Self and Other.

Consider two urns that are filled with balls; each ball has one color,

but the frequencies of different colors in the urns are unknown. The urns

may be similar or identical in their compositions, or they may be very

different. If I sample one ball from the first urn and find that it is

green, does this provide much information for making a claim about the

second urn’s composition? If I sample a thousand balls from the first urn,

does this allow me to say any more about the second urn? Everything depends

on how the two urns are related. If they are independent, then samples

drawn from the first, whether they are small or large, provide no information

about the second. But if they are not independent, then even a small sample

from the first may be informative with respect to the second.
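The urn comparison can be computed directly. The setup in this sketch is hypothetical. Each urn is either 10% or 90% green, both compositions are equally probable a priori, and balls are drawn from the first urn with replacement. If the two urns' compositions are independent, the probability that the second urn is mostly green stays at 1/2 no matter how many balls are drawn. If the two urns are known to share a composition, a single green ball already moves that probability to 9/10.

```python
from fractions import Fraction

LOW, HIGH = Fraction(1, 10), Fraction(9, 10)  # hypothetical green fractions


def prob_urn2_mostly_green(greens: int, draws: int, dependent: bool) -> Fraction:
    """Posterior probability that urn 2 is 90% green, given draws from urn 1."""
    if dependent:
        # Both urns have the same (unknown) composition.
        prior = {(c, c): Fraction(1, 2) for c in (LOW, HIGH)}
    else:
        # The urns' compositions are independent of each other.
        prior = {(c1, c2): Fraction(1, 4) for c1 in (LOW, HIGH) for c2 in (LOW, HIGH)}
    # Only urn 1 is sampled; the binomial coefficient is common to all
    # hypotheses and cancels, so it is omitted.
    weight = {pair: p * pair[0] ** greens * (1 - pair[0]) ** (draws - greens)
              for pair, p in prior.items()}
    total = sum(weight.values())
    return sum(w for (_, c2), w in weight.items() if c2 == HIGH) / total


print(prob_urn2_mostly_green(1, 1, dependent=False))       # 1/2
print(prob_urn2_mostly_green(600, 1000, dependent=False))  # 1/2
print(prob_urn2_mostly_green(1, 1, dependent=True))        # 9/10
```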

There is nothing wrong with asking whether my introspected knowledge of Self

extrapolates to a well-supported conclusion about Other. But the skeptic’s assertion

that it does not is itself a factual claim about the world. Claims of independence

are no more a priori than are claims of correlation. Perhaps a special

creationist would want to assume that different species are uncorrelated,

but no evolutionist would think that this is so. And creationists and evolutionists

agree that all human beings are genealogically related, so here

it is especially problematic to assume that Self and Other are independent.

The argument I have presented is intended to show how certain propositions

are justified; I have not addressed the question of whether people

are justified in believing this or that proposition. My own mental state

can be an indicator of the mental states of others, whether or not I know

that this is true, or understand why it is true. But what is required

for people to be justified in extrapolating from Self to Other? Must they

have reason to believe that principle (P) is satisfied? Or is it enough

that (P) is satisfied? I will not enter into this epistemological

thicket concerning internalist versus externalist views of justification,

except to note that the ideas surveyed here are relevant across a range

of views that one might take. For the externalist, it is enough that a

common cause structure has induced the correlation described by proposition

(P). For the internalist, the point of interest is that individuals are

entitled to extrapolate from Self to Other if they already have the justified

belief that a common cause structure has rendered (P) true.

Is it possible for me to figure out that proposition (P) is true, if I don’t already

know whether Other has M or A? Surely I can. I can tell whether M is

heritable by looking at still other individuals who are known to have either

M or A and seeing how they are genealogically related. But is it possible

for me to determine that (P) is true without knowing anything about which

individuals (other than myself) have M and which have A? Without this information,

how can I determine whether the traits are heritable? To be sure, I can

tell whether the behavior B is heritable, but what of the two possible

internal mechanisms that can cause individuals to exhibit B? Well, knowledge

in some strong philosophical sense is probably unnecessary, but perhaps

judgments about heritability require one to have reasonable opinions, however

tentative, about which individuals have which traits. Even if that were

so, the solution to the problem of other minds that I have suggested would

not be undermined. The incremental version of the problem, recall, asks

whether knowledge of my own case makes a difference in the characteristics

I attribute to others. It isn’t required that I conceive of myself as beginning

with no knowledge at all concerning the internal states of others.

We learned from I.J. Good that there is no saying whether a black raven

confirms the generalization that all ravens are black unless one is prepared

to make some substantive background assumptions. The mere observation that

the object before you is a black raven is not enough. The same point, applied

to the problem of other minds, is that the mere observation that Self and

Other share B and that Self has M is not enough. Further assumptions are

needed to say whether these observations confirm the hypothesis that Other

has M. Recognizing this point in the ravens paradox does not lead inevitably

to skepticism, and it should not have that effect in the case of self-to-other

inference. The problem of other minds should not be shackled with the “methodological

fiction” that Hempel imposed on the ravens paradox. When the fetters are

broken, the problem of other minds turns into the problem of searching

out common causes.
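Good's (1967) counterexample can be written out numerically. Good phrased it in terms of crows; it is rendered here with ravens. There are two possible worlds. One contains 100 black ravens, no nonblack ravens, and a million other birds. The other contains 1,000 black ravens, one white raven, and a million other birds. A bird selected at random turns out to be a black raven. That observation favors the world in which not all ravens are black, by a likelihood ratio of about 10. Whether a black raven confirms the generalization therefore depends on background assumptions about which worlds are possible.

```python
from fractions import Fraction

# The two worlds of Good's (1967) example, with "crows" rendered as "ravens".
ALL_BLACK_WORLD = {"black ravens": 100, "white ravens": 0, "other birds": 1_000_000}
NOT_ALL_BLACK_WORLD = {"black ravens": 1_000, "white ravens": 1, "other birds": 1_000_000}


def prob_random_bird_is_black_raven(world: dict[str, int]) -> Fraction:
    """Chance that a bird sampled uniformly at random from the world is a black raven."""
    return Fraction(world["black ravens"], sum(world.values()))


ratio = (prob_random_bird_is_black_raven(NOT_ALL_BLACK_WORLD)
         / prob_random_bird_is_black_raven(ALL_BLACK_WORLD))
print(float(ratio))  # roughly 9.99, favoring the world where not all ravens are black
```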

References

Allen, C. and Bekoff, M. (1997): Species of Mind – the Philosophy and Biology of Cognitive Ethology. Cambridge: MIT Press.

Carruthers, P. and Smith, P. (1995): Theories of Theories of Mind. Cambridge: Cambridge University Press, pp. 277-292.

Cartwright, N. (1989): Nature’s Capacities and Their Measurement. Oxford: Oxford University Press.

De Waal, F. (1991): “Complementary Methods and Convergent Evidence in the Study of Primate Social Cognition.” Behaviour 118: 297-320.

De Waal, F. (1999): “Anthropomorphism and Anthropodenial – Consistency in our Thinking about Humans and Other Animals.” Philosophical Topics, forthcoming.

Dennett, D. (1989): “Intentional Systems in Cognitive Psychology -- the ‘Panglossian Paradigm’ Defended.” In The Intentional Stance. Cambridge: MIT Press, pp. 237-268.

Duhem, P. (1914): The Aim and Structure of Physical Theory. Princeton: Princeton University Press, 1954.

Eldredge, N. and Cracraft, J. (1980): Phylogenetic Patterns and the Evolutionary Process. New York: Columbia University Press.

Enç, B. (1995): “Units of Behavior.” Philosophy of Science 62: 523-542.

Forster, M. and Sober, E. (1994): “How to Tell When Simpler, More Unified, or Less Ad Hoc Theories Will Provide More Accurate Predictions.” British Journal for the Philosophy of Science 45: 1-36.

Forster, M. and Sober, E. (2000): “Why Likelihood?” In M. Taper and S. Lee (eds.), The Evidence Project. Chicago: University of Chicago Press.

Good, I.J. (1967): “The White Shoe is a Red Herring.” British Journal for the Philosophy of Science 17: 322.

Good, I.J. (1968): “The White Shoe Qua Herring is Pink.” British Journal for the Philosophy of Science 19: 156-157.

Hempel, C.G. (1965): “Studies in the Logic of Confirmation.” In Aspects of Scientific Explanation and Other Essays. New York: Free Press.

Hempel, C.G. (1967): “The White Shoe -- No Red Herring.” British Journal for the Philosophy of Science 18: 239-240.

Heyes, C.M. (1998): “Theory of Mind in Nonhuman Primates.” Behavioral and Brain Sciences 21: 101-148.

Kyburg, H. (1961): Probability and the Logic of Rational Belief. Middletown, CT: Wesleyan University Press.

Morgan, C.L. (1903): An Introduction to Comparative Psychology (2nd ed.). London: Walter Scott.

Quine, W. (1953): “Two Dogmas of Empiricism.” In From a Logical Point of View. Cambridge, MA: Harvard University Press, pp. 20-46.

Reichenbach, H. (1956): The Direction of Time. Berkeley: University of California Press.

Royall, R. (1997): Statistical Evidence -- a Likelihood Paradigm. Boca Raton: Chapman and Hall/CRC.

Shapiro, L. (1999): “Presence of Mind.” In V. Hardcastle (ed.), Biology Meets Psychology – Constraints, Connections, and Conjectures. Cambridge: MIT Press.

Shapiro, L. (forthcoming): “Adapted Minds.” In J. McIntosh (ed.), Naturalism, Evolution, and Intentionality. Cambridge: MIT Press.

Sober, E. (1988): Reconstructing the Past -- Parsimony, Evolution, and Inference. Cambridge: MIT Press.

Sober, E. (1998a): “Black Box Inference -- When Should an Intervening Variable be Postulated?” British Journal for the Philosophy of Science 49: 469-498.

Sober, E. (1998b): “Morgan’s Canon.” In C. Allen and D. Cummins (eds.), The Evolution of Mind. New York: Oxford University Press, pp. 224-242.

Sober, E. (1999): “Testability.” Proceedings and Addresses of the American Philosophical Association 73: 47-76. Also available at the following URL – http://philosophy.wisc.edu/sober.

Sober, E. and Barrett, M. (1992): “Conjunctive Forks and Temporally Asymmetric Inference.” Australasian Journal of Philosophy 70: 1-23.

Van Fraassen, B. (1982a): “The Charybdis of Realism – Epistemological Implications of Bell’s Inequality.” Synthese 52: 25-38.

Van Fraassen, B. (1982b): “Rational Belief and the Common Cause Principle.” In R. McLaughlin (ed.), What? Where? When? Why? Essays in Honor of Wesley Salmon. Dordrecht: Reidel, pp. 193-209.

Notes

* My thanks to Colin Allen, Martin Barrett, Marc Bekoff, Tom Bontly, James Crow, Frans De Waal, Ellery Eells, Mehmet Elgin, Berent Enç, Peter Godfrey-Smith, Daniel Hausman, Richard Lewontin, David Papineau, Larry Shapiro, and Alan Sidelle for useful comments on earlier drafts. I also benefitted from discussing this paper at the London School of Economics and Political Science and at the University of Illinois at Chicago.